
Gather data: GPU Projects with 20000+ atoms,

Posted: Sat Apr 07, 2012 6:29 pm

by bruce

People have observed reduced PPD and reduced GPU utilization on the new projects with high atom-counts.

viewtopic.php?f=74&t=21180

viewtopic.php?f=74&t=21208

I've alluded to suspicions that the PCIe bus, rather than the compute speed of the GPU, may be the limiting factor for these projects. See "Subject: TPF is WAY long -- P7641" for one such example.

Please report your project number, GPU model, and the characteristics of the bus interconnecting it with Main RAM, and any observed changes in PPD or GPU utilization from what you've seen on smaller projects.

Re: Gather data: GPU Projects with 20000+ atoms,

Posted: Sat Apr 07, 2012 10:37 pm

by *hondo*

I can tell you I'm Folding two WUs with an i5 & a 580GTX

Sorry, I know nothing about the following: "and the characteristics of the bus interconnecting it with Main RAM,"

Edit & updated information

System Specification

Case: Fractal Design Define R3 Black

Power Supply: OCZ ZS 750w PSU

CPU: Intel Core i5 2500K 3.30GHz Sandybridge overclocked to 4.40GHz

Motherboard: Intel Z68 (Socket 1155) DDR3 Motherboard ** B3 REVISION ** = Motherboard Gigabyte GA-Z68AP-D3

Cooler: Xigmatek Dark Knight

RAM: 4GB DDR3 1600MHz Dual Channel Kit

Hard Drive: Western Digital Caviar Blue 500GB SATA 6GB/s

Graphics Card: Gainward 586 PCI-e

Sound: Realtek 7.1 Channel Sound (On-Board) NOT USED Audio thru Creative Labs PCI

Optical Drive: LG DVD+/-RW SATA Drive

Other than both WUs came off the same PC / GPU

Edit More info that doesn't mean too much to me

Well, this is what I go off: a difference of plus 5k PPD and, more to the point, these WUs ran consecutively on the same PC.

In the case of P 7200, FahMon doesn't take any score from these WUs into account in either case, because there is no information available in the WU summary.

Re: Gather data: GPU Projects with 20000+ atoms,

Posted: Sun Apr 08, 2012 2:33 am

by Ripper36

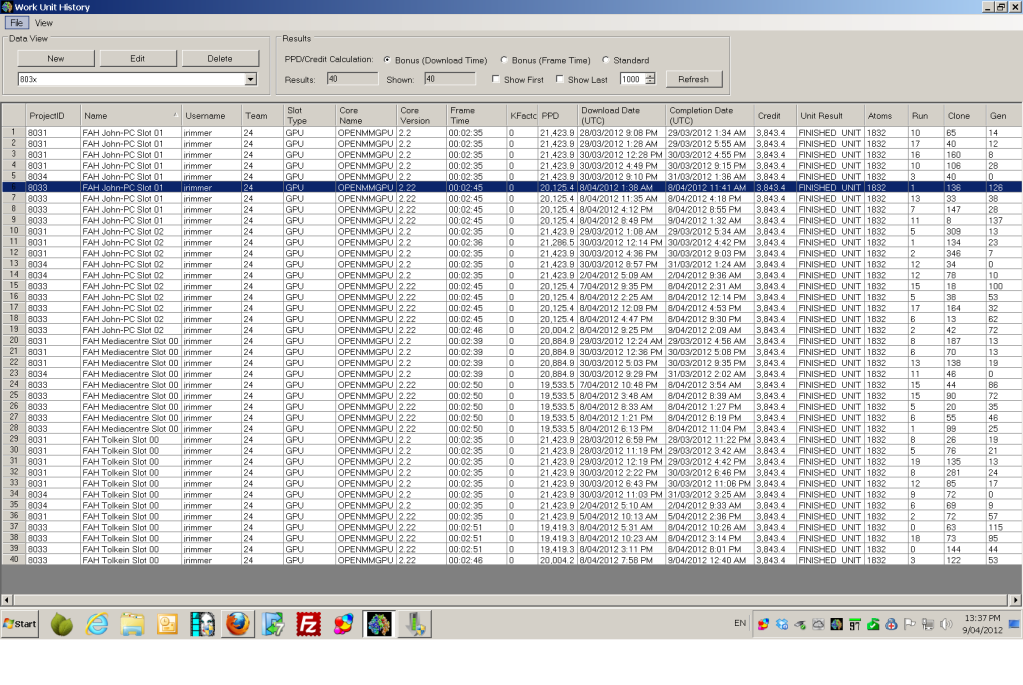

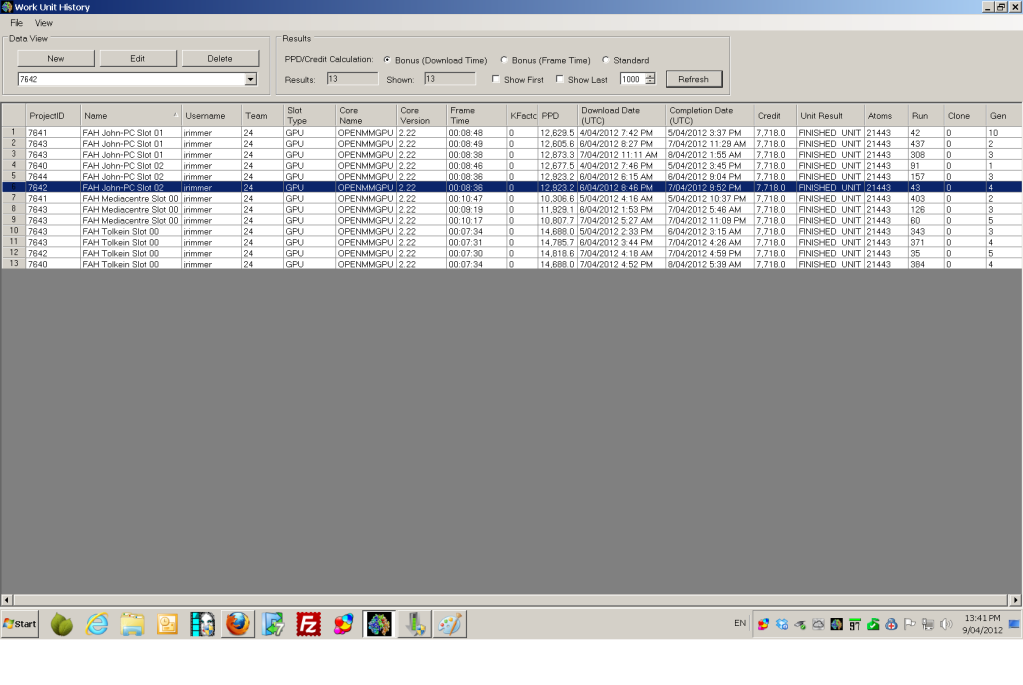

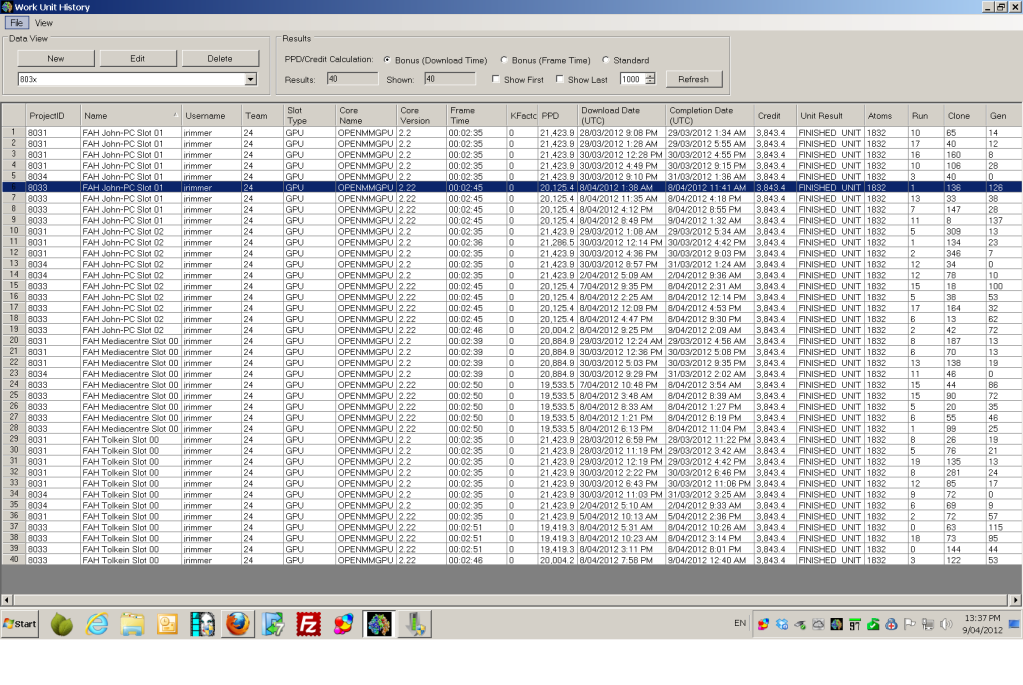

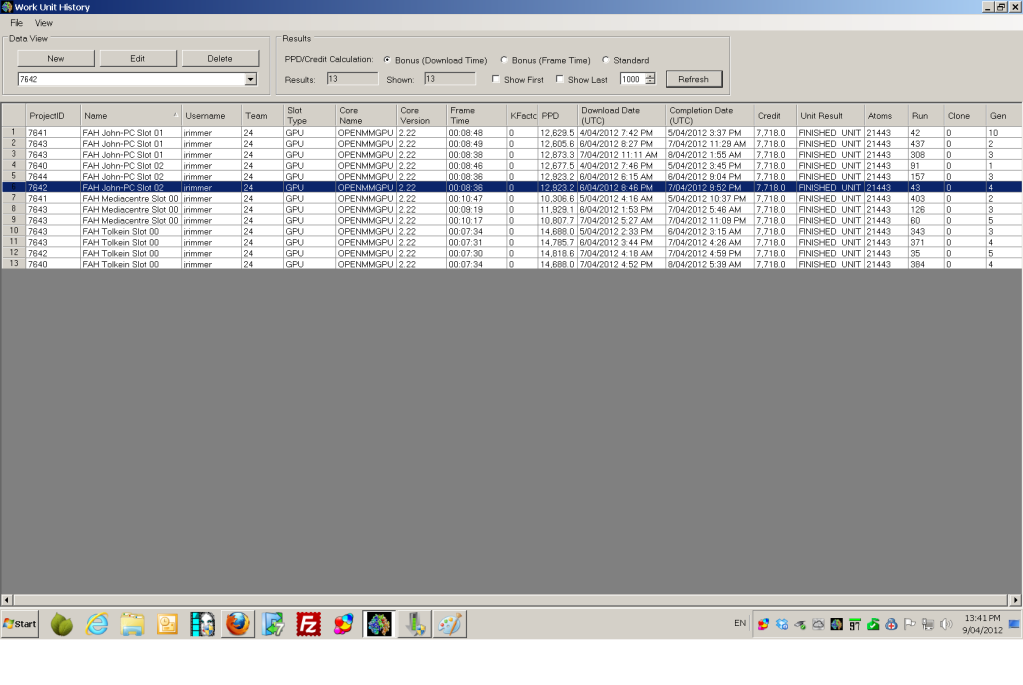

I can post data on forty project 803x (1832 atoms) results, and on thirteen 764x (21433 atoms) projects. There is a dramatic difference.

I am folding on four NVIDIA GTX570OC cards

- two on JOHN-PC

- one on TOLKEIN

- one on MEDIACENTRE

The thing that stands out, supporting your hypothesis, is that TOLKEIN, which has a Z68 chipset and an x16 PCI-E slot, is so much faster than JOHN-PC-1 and JOHN-PC-2 running at x8, when they were all equivalent on 8031 units. Mediacentre is slower again, on a PCI-E 1.1 interface.

There is also a difference in drivers, though I'm not sure that's relevant to the results.
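For reference, the theoretical per-direction bandwidth of the link configurations mentioned above can be tabulated with a short sketch. The per-lane figures (250 MB/s for PCIe 1.x, 500 MB/s for PCIe 2.0) are the standard theoretical rates. The PCIe generation of the x16 and x8 slots and the lane count of the PCI-E 1.1 link are not stated in the post, so the combinations below are assumptions, and real transfer rates will be lower than these ceilings.

```python
# Theoretical one-way PCIe bandwidth, using the standard per-lane rates
# for PCIe 1.x and 2.0 (both use 8b/10b encoding).
MB_PER_LANE = {"1.1": 250, "2.0": 500}  # MB/s per lane, per direction


def link_bandwidth_mb(gen: str, lanes: int) -> int:
    """Return the theoretical one-way bandwidth in MB/s for a PCIe link."""
    return MB_PER_LANE[gen] * lanes


if __name__ == "__main__":
    # Assumed configurations: generation and lane count are not all given
    # in the post, so treat these rows as illustrative only.
    for name, gen, lanes in [
        ("PCIe 2.0 x16", "2.0", 16),
        ("PCIe 2.0 x8", "2.0", 8),
        ("PCIe 1.1 x16", "1.1", 16),
    ]:
        print(f"{name}: {link_bandwidth_mb(gen, lanes)} MB/s")
```

If the bus really is the bottleneck, halving the link width should show up as a clear TPF difference on the large projects but not on the small ones, which is the pattern described above.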

Interested in your comments

Re: Gather data: GPU Projects with 20000+ atoms,

Posted: Sun Apr 08, 2012 11:16 am

by Nathan_P

I've taken this from a thread over at [H] where a teammate has the same problem

But jeez stanford..... PPD is a mere 25% of what my cards could get on other units. 25%! seriously?! I know you think GPU is over-valued but no warning? just cut PPD to 1/4?

anyhow, here are some details

core 15

Project: 7643 (Run 426, Clone 0, Gen 1)

Project: 7642 (Run 455, Clone 0, Gen 0)

Project: 7641 (Run 151, Clone 0, Gen 2)

each worth 7718 points

570 getting TPF of: ~21 minutes, about 5250 PPD

460 getting TPF of: ~53 minutes, about 2075 PPD

The 570's are in a: EVGA x58 Classified SLI, Intel i7 970 @ 4.15 GHz, Corsair H70, 12GB DDR3-1600 CAS 8, EVGA GTX570 SLI @ 825/1650/1950 and the 460 is in a: ASUS ROG GENE-Z, Intel i7 2600K @ 4.0, Antek Khuler 920, 4GB DDR3-2133 CAS 9, Galaxy GTX460.

He's confirmed that all the cards are running at x16 speed.

Not sure if it helps any but i'm interested in further thoughts on this
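The TPF and PPD figures quoted above can be cross-checked with a little arithmetic. This is a minimal sketch that assumes each WU consists of 100 frames (the usual case, but not stated in the quoted post); the function name is just for illustration.

```python
def ppd(points: float, tpf_minutes: float, frames: int = 100) -> float:
    """Points per day for a WU worth `points`, given time per frame in minutes.

    `frames` defaults to 100, which is an assumption about these projects.
    """
    wu_minutes = tpf_minutes * frames  # total run time of one WU
    return points * 1440 / wu_minutes  # 1440 minutes in a day


if __name__ == "__main__":
    # Values quoted in the post: 7718 points, TPF ~21 min (570) and ~53 min (460).
    print(f"GTX 570: {ppd(7718, 21):.0f} PPD")
    print(f"GTX 460: {ppd(7718, 53):.0f} PPD")
```

Under that assumption the results come out near 5290 and 2100 PPD, within a percent or so of the "about 5250" and "about 2075" reported, so the quoted numbers are self-consistent.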

Re: Gather data: GPU Projects with 20000+ atoms,

Posted: Sun Apr 08, 2012 10:52 pm

by GreyWhiskers

From my other post, here's the pivot table that goes along with it. The last section has the large, >20K, projects.

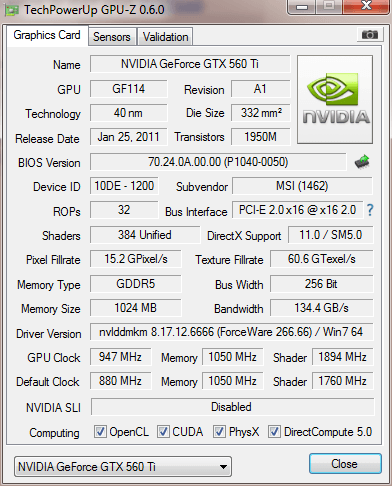

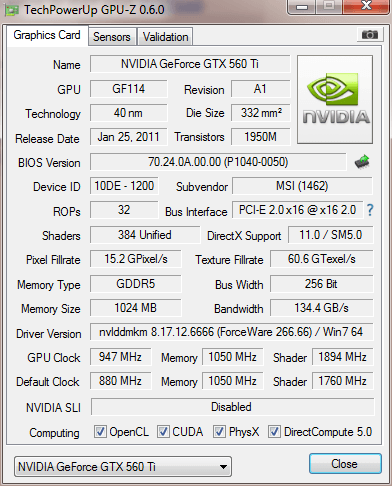

The GPU-Z pic above shows my current memory bandwidth at the O/C I'm running (947 MHz)

[edit]corrected clock rate

Re: Gather data: GPU Projects with 20000+ atoms,

Posted: Mon Apr 09, 2012 1:51 am

by Ripper36

Wow - I must get that app GPU-Z and update my post.

Re: Gather data: GPU Projects with 20000+ atoms,

Posted: Mon Apr 09, 2012 2:14 am

by GreyWhiskers

Once you get it, the little camera icon just under the red X close button will take a screenshot of the GPU-Z screen, upload it to GPU-Z's servers, and give you a link to the image. One stop shopping.

Re: Gather data: GPU Projects with 20000+ atoms,

Posted: Mon Apr 09, 2012 2:38 am

by Ripper36

Thanks - my post has now been edited and upgraded!

Re: Gather data: GPU Projects with 20000+ atoms,

Posted: Mon Apr 09, 2012 5:53 am

by Jesse_V

My 560TI is working away on project 7641, which has 21,443 atoms, and according to GPU-Z it is at 89-90% GPU load. V7 tells me that it's pulling in 11k PPD, which is below the normal 14k PPD. As I was working on this post it finished that WU and picked up a 562-atom 7612, and it's working away at 99% usage with 14k PPD again, just as I'd expect. So there is a difference. I have a Gigabyte P67X-UD3-B3 (Socket 1155) motherboard, my PCI has a bus width of 32 bits according to Piriform Speccy, and I only have a single GPU. I have 8 GB of RAM and they're pretty fast.

GPU-Z gives more information about the GPU's Bus Interface: "PCI-E 2.0 x16 @ x8 2.0" which of course matches GreyWhiskers's above.
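When comparing reports, it helps to read the Bus Interface string carefully: the part before the "@" is what the card supports, and the part after it is the link currently negotiated. Below is a rough sketch of a check for a reduced link. The regular expression is written against the string format quoted in this thread and is an assumption; other GPU-Z versions may format the field differently.

```python
import re

# Pattern for strings like: PCI-E 2.0 x16 @ x8 2.0
# (format assumed from the examples quoted in this thread)
BUS_RE = re.compile(
    r"PCI-E\s+(?P<max_gen>[\d.]+)\s*x(?P<max_width>\d+)"
    r"\s*@\s*x(?P<cur_width>\d+)\s+(?P<cur_gen>[\d.]+)"
)


def parse_bus_interface(text: str) -> dict:
    """Split a GPU-Z style Bus Interface string into supported/current link info."""
    m = BUS_RE.search(text)
    if m is None:
        raise ValueError(f"unrecognised bus interface string: {text!r}")
    return {
        "max_gen": float(m["max_gen"]),
        "max_width": int(m["max_width"]),
        "cur_width": int(m["cur_width"]),
        "cur_gen": float(m["cur_gen"]),
    }


def is_link_reduced(text: str) -> bool:
    """True if the current link is narrower or older than the card supports."""
    info = parse_bus_interface(text)
    return (
        info["cur_width"] < info["max_width"]
        or info["cur_gen"] < info["max_gen"]
    )


if __name__ == "__main__":
    for s in ("PCI-E 2.0 x16 @ x8 2.0", "PCI-E 2.0 x16 @ x16 2.0"):
        print(s, "-> reduced" if is_link_reduced(s) else "-> full speed")
```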

Let me know if there's any further system specs you need.

Re: Gather data: GPU Projects with 20000+ atoms,

Posted: Mon Apr 09, 2012 6:57 am

by GreyWhiskers

Jesse_V wrote: "PCI-E 2.0 x16 @ x8 2.0" which of course matches GreyWhiskers's above.

Let me know if there's any further system specs you need.

Check your GPU-Z again -- you said, "PCI-E 2.0 x16 @ x8 2.0", while my screenshot above shows "PCI-E 2.0 x16 @ x16 2.0"

Tom's Hardware forum has a similar situation from a couple of years ago --

PCIe Bandwidth issues.. Showing x8 width on a single x16 slot. It may or may not apply...Which PCI-E slot the board is installed in, etc.

Switching from x8 to x16 on that GTX 260 probably won't make any noticeable difference. However, you might look into your BIOS settings and make sure there is not a setting that's forcing your PCI-E slot to run x8. You could also try updating to the latest driver versions for your GPU and motherboard chipset to be sure there's no updates needed there. (could also check for BIOS updates)

Do you have another graphics card installed on a second GPU slot? (For instance, a second card for PhysX?)

Is this your motherboard?

GA-MA490X-UD4

http://www.gigabyte.us/Products/Mo [...] uctID=3003

This motherboard has two PCI-E GPU slots. One runs at x16, and the other runs at x8. So if you have your GPU in the second slot, it will only run at x8.

The x16 slot is the one closest to the CPU.

I've pulled out a few lines from the Gigabyte spec sheet for your MOBO.

1 x PCI Express x16 slot, running at x16 (PCIEX16)

* For optimum performance, if only one PCI Express graphics card is to be installed, be sure to install it in the PCIEX16 slot.

1 x PCI Express x16 slot, running at x8 (PCIEX8)

* The PCIEX8 slot shares bandwidth with the PCIEX16 slot. When the PCIEX8 slot is populated, the PCIEX16 slot will operate at up to x8 mode.

3 x PCI Express x1 slots

(All PCI Express slots conform to PCI Express 2.0 standard.)

2 x PCI slots

Re: Gather data: GPU Projects with 20000+ atoms,

Posted: Mon Apr 09, 2012 2:51 pm

by Jesse_V

GreyWhiskers wrote:Jesse_V wrote: "PCI-E 2.0 x16 @ x8 2.0" which of course matches GreyWhiskers's above.

Let me know if there's any further system specs you need.

Check your GPU-Z again -- you said, "PCI-E 2.0 x16 @ x8 2.0", while my screenshot above shows "PCI-E 2.0 x16 @ x16 2.0"

Tom's Hardware forum has a similar situation from a couple of years ago --

PCIe Bandwidth issues.. Showing x8 width on a single x16 slot. It may or may not apply...Which PCI-E slot the board is installed in, etc.

...

Don't tell me it's been at a reduced performance all this time for the last two months!

This is my first build, I've still got a lot to learn...

Thanks for the valuable information. The GPU is plugged into the PCI slot that is closest to the CPU, so it should be the x16 one. I'll check out the BIOS to see what's up. I checked for updates and things seemed fine; I'll keep poking around.

EDIT: Confirmed: it is seated in the x16 slot.

EDIT2: Under "Advanced BIOS Features" the "Init Display First" setting is set to "PCIE x16". I'll try to clean/reseat the GPU and see if that helps.

EDIT3: Reseating did nothing. I did however disable the "Turbo SATA3 / USB 3.0" setting in the BIOS as apparently it uses PCI bandwidth. I'm back to x16!

Re: Gather data: GPU Projects with 20000+ atoms,

Posted: Mon Apr 09, 2012 8:34 pm

by sswilson

I'll update with snippets from the code later this evening, but so far I've isolated out CPU clockspeed, and system memory speed with virtually no difference to TPF. Next up was going to be PCIe bus speed, but my system grabbed two 8XXX GPU WUs so that will have to wait.

As it stands....

Asus 990FX chipset

1090t

8G DDR3

2X 560 ti @ X16 PCIe bus (both verified via gpu-z)

CPU @ 3970 / Mem 1600 = TPF 9 min 29 seconds

CPU @ 3010 / Mem 1600 = TPF 9 Min 32 seconds

CPU @ 3970 / Mem 1K = TPF 9 Min 44 seconds

While the memory TPFs might seem to point to memory being the current bottleneck, I think the difference we're seeing is pretty typical to what we'd see on any other WU. If it were the memory interface causing low PPD on these WUs, I'd expect to see a much larger discrepancy when lowering mem speed by over 1/3 (timings remained the same).
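To put numbers on that reasoning, here is a quick sketch of the relative changes in the three runs above. It treats "Mem 1K" as 1000, which is an assumption about the shorthand; the helper names are only for illustration.

```python
def to_seconds(minutes: int, seconds: int) -> int:
    """Convert a TPF given as minutes and seconds into seconds."""
    return minutes * 60 + seconds


def pct_change(old: float, new: float) -> float:
    """Percentage change from `old` to `new`."""
    return (new - old) / old * 100


if __name__ == "__main__":
    baseline = to_seconds(9, 29)   # CPU 3970 / Mem 1600
    cpu_down = to_seconds(9, 32)   # CPU 3010 / Mem 1600
    mem_down = to_seconds(9, 44)   # CPU 3970 / Mem 1K (assumed to mean 1000)

    print(f"CPU clock {pct_change(3970, 3010):+.1f}% -> "
          f"TPF {pct_change(baseline, cpu_down):+.1f}%")
    print(f"Mem speed {pct_change(1600, 1000):+.1f}% -> "
          f"TPF {pct_change(baseline, mem_down):+.1f}%")
```

A CPU clock cut of roughly a quarter moves TPF by about half a percent, and a memory speed cut of more than a third moves it by under three percent, which is consistent with neither being the main bottleneck on these WUs.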

Next possible culprits that I'll try out when I get another high atom WU will be NB speed (running @ 2400 for all above readings), and PCIe bus speed.

I was surprised to see little difference on the CPU front... I guess my initial impression that these were offloading a lot of work to the CPU was false.

Re: Gather data: GPU Projects with 20000+ atoms,

Posted: Tue Apr 10, 2012 10:00 am

by {RaW}Eagle1

Nathan_P wrote:I've taken this from a thread over at [H] where a teammate has the same problem

But jeez stanford..... PPD is a mere 25% of what my cards could get on other units. 25%! seriously?! I know you think GPU is over-valued but no warning? just cut PPD to 1/4? . . .

570 getting TPF of: ~21 minutes, about 5250 PPD

460 getting TPF of: ~53 minutes, about 2075 PPD

The 570's are in a: EVGA x58 Classified SLI, . . .

. . . He's confirmed that all the cards are running at x16 speed.

There are 3 models of that mobo. Only one of them (the E759) runs SLI at 16/16/16. The E760 and E761 have split bandwidth on the second and third cards. Link:

http://www.evga.com/articles/00447/

Please can you confirm which one is being used in your example.

Thanks

Re: Gather data: GPU Projects with 20000+ atoms,

Posted: Tue Apr 10, 2012 10:16 am

by {RaW}Eagle1

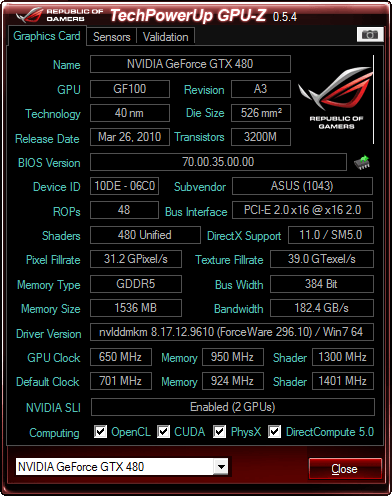

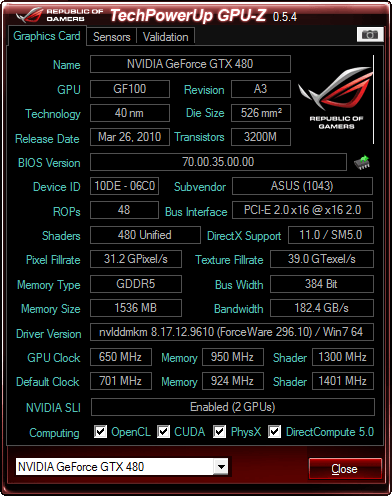

86% load

Graphics: ASUS GTX480 SLI (underclocked) 650|1300|1900 @ 16x/16x,

Mainboard: ASUS ROG Crosshair Formula V 990FX/SB950,

CPU: AMD Phenom II 1090T @ 3850MHz,

RAM: G.Skill 12800CL8 8GB @ 1920MHz 9|9|9|24

Graphics driver version: 296.10

FAH client versions: SMP 6.34 (folding on 4 of 6 cores), GPU 6.41r2 (both cards)

Re: Gather data: GPU Projects with 20000+ atoms,

Posted: Tue Apr 10, 2012 10:22 am

by HaloJones

I would suggest that cpu folding has no impact on this. I have a 460GTX running along with cpu folding and a 450GTS without cpu folding and both are impacted in the same way.